Lightning Lab

Problem 1

Upgrade the current version of kubernetes from 1.28.0 to 1.29.0 exactly using the kubeadm utility. Make sure that the upgrade is carried out one node at a time starting with the controlplane node. To minimize downtime, the deployment gold-nginx should be rescheduled on an alternate node before upgrading each node.

Upgrade controlplane node first and drain node node01 before upgrading it. Pods for gold-nginx should run on the controlplane node subsequently.

Cluster Upgraded?

pods ‘gold-nginx’ running on controlplane?

In short: upgrade the cluster from 1.28.0 to 1.29.0 with kubeadm, one node at a time, starting with the controlplane node. Before each node is upgraded, gold-nginx has to be rescheduled onto the other node to keep downtime low.

Upgrade the controlplane node first, then drain node01 before upgrading it. After that, the gold-nginx pods should be running on the controlplane node.

Checks: is the cluster upgraded, and is the gold-nginx pod running on controlplane?

Walkthrough

- Check the nodes and pods

    controlplane ~ ✖ kubectl get node
    NAME           STATUS   ROLES           AGE   VERSION
    controlplane   Ready    control-plane   35m   v1.28.0
    node01         Ready    <none>          35m   v1.28.0

    controlplane ~ ➜ kubectl get pod
    NAME                          READY   STATUS    RESTARTS   AGE
    gold-nginx-5d9489d9cc-mm2mt   1/1     Running   0          10m

- Check the kubeadm version (it is 1.28)

    controlplane ~ ✖ kubeadm version
    kubeadm version: &version.Info{Major:"1", Minor:"28", GitVersion:"v1.28.0", GitCommit:"855e7c48de7388eb330da0f8d9d2394ee818fb8d", GitTreeState:"clean", BuildDate:"2023-08-15T10:20:15Z", GoVersion:"go1.20.7", Compiler:"gc", Platform:"linux/amd64"}

- Upgrade kubeadm (see the docs below)

💡 Search the docs for "kubeadm upgrade".

[Upgrading kubeadm clusters](https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/)

    apt update
    apt-cache madison kubeadm

This refreshes the package index and lists the available versions, but only 1.28 shows up.

In that case the 1.29 package repository has to be configured first. See the page below.

💡 Search the docs for "kubeadm install".

[Installing kubeadm](https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/)

Point the package repository at 1.29.

    echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

Drain the controlplane node, refresh the index, and check which versions are now available.

    kubectl drain controlplane --ignore-daemonsets
    apt update
    apt-cache madison kubeadm

1.29.0-1.1 is now available.
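The version lookup above can also be scripted instead of read by eye. This is only a sketch: `pick_pkg_version` is a made-up helper name, and it assumes the usual `package | version | source` layout of `apt-cache madison` output.

```shell
#!/usr/bin/env bash
# pick_pkg_version is a hypothetical helper, not part of apt.
# It reads `apt-cache madison`-style lines on stdin and prints the first
# package version that starts with the wanted upstream version
# (for example 1.29.0 -> 1.29.0-1.1).
pick_pkg_version() {
  local want="$1"
  awk -F'|' -v want="$want" '
    {
      ver = $2
      gsub(/^[ \t]+|[ \t]+$/, "", ver)   # trim the version column
      if (index(ver, want "-") == 1) { print ver; exit }
    }'
}

# Intended use on a node (needs apt and root, so it is left commented out):
# ver="$(apt-cache madison kubeadm | pick_pkg_version 1.29.0)"
# apt-get install -y "kubeadm=${ver}"
```

Matching on the `1.29.0-` prefix keeps the exact patch version the task asks for, even when the repository also lists newer 1.29.x packages.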

Upgrade

    apt-get install kubeadm=1.29.0-1.1
    kubeadm upgrade plan v1.29.0
    kubeadm upgrade apply v1.29.0
    apt-get install kubelet=1.29.0-1.1
    systemctl daemon-reload
    systemctl restart kubelet
    kubectl uncordon controlplane

Check the taint. gold-nginx can only be rescheduled onto the controlplane node once the NoSchedule taint is gone.

    kubectl describe node controlplane | grep -i taint
    # remove the taint
    kubectl taint node controlplane node-role.kubernetes.io/control-plane:NoSchedule-
    # confirm it was removed
    kubectl describe node controlplane | grep -i taint

- Upgrade node01

Drain it first.

    kubectl drain node01 --ignore-daemonsets

Connect to node01.

    ssh node01

Repeat the same steps there.

    echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
    apt update
    apt-cache madison kubeadm
    # 1.29.0-1.1 should now be listed
    apt-get install kubeadm=1.29.0-1.1
    kubeadm upgrade node
    apt-get install kubelet=1.29.0-1.1
    systemctl daemon-reload
    systemctl restart kubelet

Now go back to the controlplane node and make node01 schedulable again.

    root@controlplane:~# kubectl uncordon node01
    # final check
    root@controlplane:~# kubectl get pods -o wide | grep gold

Problem 2

Print the names of all deployments in the ‘admin2406’ namespace in the following format:

DEPLOYMENT CONTAINER_IMAGE READY_REPLICAS NAMESPACE

The data should be sorted by the increasing order of the ‘deployment name’.

Example:

    DEPLOYMENT   CONTAINER_IMAGE   READY_REPLICAS   NAMESPACE
    deploy0      nginx:alpine      1                admin2406

Write the result to the file ‘/opt/admin2406_data’.

In short: list every deployment in the admin2406 namespace together with its container image and ready replica count, in the given column format, sorted by deployment name in ascending order, and write the output to /opt/admin2406_data.

Approach

- Query all deployments in the admin2406 namespace.
- For each one, take the name, the container image, and the number of ready replicas.
- Print these in the required format.
- Save the result to /opt/admin2406_data.

Solution

    kubectl get deployment -n admin2406 \
      -o custom-columns=DEPLOYMENT:.metadata.name,CONTAINER_IMAGE:.spec.template.spec.containers[].image,READY_REPLICAS:.status.readyReplicas,NAMESPACE:.metadata.namespace \
      --sort-by=.metadata.name > /opt/admin2406_data

Reference

[Command line tool (kubectl): custom columns](https://kubernetes.io/docs/reference/kubectl/#custom-columns)

[Command line tool (kubectl): sorting list objects](https://kubernetes.io/docs/reference/kubectl/#sorting-list-objects)

Problem 3

A kubeconfig file called admin.kubeconfig has been created in /root/CKA. There is something wrong with the configuration. Troubleshoot and fix it.

In short: /root/CKA/admin.kubeconfig is misconfigured. Find the problem and fix it.

Solution

Check whether the kube-apiserver port is correct.

    cat /root/CKA/admin.kubeconfig

The port in the server address has to be changed from 4380 to 6443.

💡 Port 6443 is the default port of the Kubernetes API server process.

- Searching the docs for "kubeconfig port" turns this up.
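The same fix can be applied without opening an editor. The sketch below is an assumption-laden convenience, not the lab's official solution: `fix_apiserver_port` is a hypothetical helper, and it assumes the kubeconfig has a single `server: https://host:port` line.

```shell
#!/usr/bin/env bash
# fix_apiserver_port is a hypothetical helper. It rewrites only the port on
# the "server:" line of a kubeconfig and keeps a .bak copy of the original.
fix_apiserver_port() {
  local file="$1" port="${2:-6443}"
  sed -i.bak -E "s#^([[:space:]]*server:[[:space:]]*https://[^:/]+):[0-9]+#\1:${port}#" "$file"
}

# Intended use on the lab host (commented out because it needs the real file):
# fix_apiserver_port /root/CKA/admin.kubeconfig
# kubectl cluster-info --kubeconfig /root/CKA/admin.kubeconfig
```

Running `kubectl cluster-info` with the repaired file is a quick way to confirm the API server is reachable again.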

Problem 4

Create a new deployment called nginx-deploy, with image nginx:1.16 and 1 replica. Next upgrade the deployment to version 1.17 using rolling update.

In short: create the deployment nginx-deploy with image nginx:1.16 and one replica, then roll it forward to 1.17.

Solution

Generate the manifest first. The dry-run options do most of the work.

    kubectl create deployment nginx-deploy --image=nginx:1.16 --replicas=1 --dry-run=client -o yaml > test.yaml

Apply the file.

    kubectl apply -f test.yaml

Trigger the rolling update by changing the image. Either form works.

    kubectl set image deployment.v1.apps/nginx-deploy nginx=nginx:1.17
    # or
    kubectl set image deployment/nginx-deploy nginx=nginx:1.17

Reference: search for "updating deployment".

[Deployments](https://kubernetes.io/docs/concepts/workloads/controllers/deployment/)

5번 문제

A new deployment called alpha-mysql has been deployed in the alpha namespace. However, the pods are not running. Troubleshoot and fix the issue. The deployment should make use of the persistent volume alpha-pv to be mounted at /var/lib/mysql and should use the environment variable MYSQL_ALLOW_EMPTY_PASSWORD=1 to make use of an empty root password.

Important: Do not alter the persistent volume.

alpha namespace에 alpha-mysql라는 새 deployment가 배포되었습니다. 그러나 pods가 실행되고 있지 않습니다. 문제를 해결하고 해결하십시오. deployment는 /var/lib/mysql에 마운트할 영구 볼륨 alpha-pv를 사용해야 하며 빈 루트 암호를 사용하려면 환경 변수 MYSQL_ALLOW_EMPETY_PASSWORD=1을 사용해야 합니다.

중요: 지속적인 볼륨을 변경하지 마십시오.

풀이

[pod 상태 확인]

kubectl get all -n alpha

kubectl describe pod/alpha-mysql-68c6b855bb-ztscr -n alpha

(결과)

원인이 persistentvolumeclaim “mysql-alpha-pvc” not found 임을 확인.

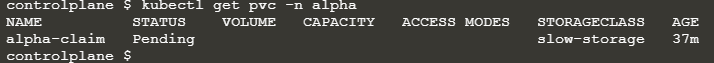

[pvc 확인]

kubectl get pvc -n alpha

mysql-alpha-pvc가 없음.

[pv 확인]

kubectl get pv -n alpha

확인후 어떤 storagecalss 를 사용하는지 확인

kubectl describe pv alpha-pv -n alpha

(결과)

slow storageclass를 사용.

pvc 생성

apiVersion: v1kind: PersistentVolumeClaimmetadata: name: mysql-alpha-pvc namespace: alphaspec: accessModes: - ReadWriteOnce resources: requests: storage: 1Gi storageClassName: slow참고 deployment volume pv

https://kubernetes.io/docs/concepts/storage/persistent-volumes/

[Persistent Volumes

This document describes persistent volumes in Kubernetes. Familiarity with volumes, StorageClasses and VolumeAttributesClasses is suggested. Introduction Managing storage is a distinct problem from managing compute instances. The PersistentVolume subsystem

kubernetes.io](https://kubernetes.io/docs/concepts/storage/persistent-volumes/)

Problem 6

Take the backup of ETCD at the location /opt/etcd-backup.db on the controlplane node.

In short: save an etcd snapshot to /opt/etcd-backup.db on the controlplane node.

Solution

[Check the etcd pod name]

    kubectl get all -n kube-system
    kubectl describe pod/etcd-controlplane -n kube-system

Backup

    ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
      --cacert=/etc/kubernetes/pki/etcd/ca.crt \
      --cert=/etc/kubernetes/pki/etcd/server.crt \
      --key=/etc/kubernetes/pki/etcd/server.key \
      snapshot save /opt/etcd-backup.db

Reference

Search for "etcd backup".

[Operating etcd clusters for Kubernetes](https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/)

Problem 7

Create a pod called secret-1401 in the admin1401 namespace using the busybox image. The container within the pod should be called secret-admin and should sleep for 4800 seconds.

The container should mount a read-only secret volume called secret-volume at the path /etc/secret-volume. The secret being mounted has already been created for you and is called dotfile-secret.

In short: pod secret-1401 in namespace admin1401, image busybox, container name secret-admin, sleeping for 4800 seconds, with the existing secret dotfile-secret mounted read-only as secret-volume at /etc/secret-volume.

Solution

    kubectl run secret-1401 -n admin1401 --image=busybox --dry-run=client -o yaml --command -- sleep 4800 > admin.yaml

The generated file then has to be edited.

Points to check: the container name, the sleep 4800 command, and the mount path.

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: secret-1401
      name: secret-1401
      namespace: admin1401
    spec:
      volumes:
      - name: secret-volume
        # secret volume
        secret:
          secretName: dotfile-secret
      containers:
      - command:
        - sleep
        - "4800"
        image: busybox
        name: secret-admin
        # volumes' mount path
        volumeMounts:
        - name: secret-volume
          readOnly: true
          mountPath: "/etc/secret-volume"

Create it

    kubectl apply -f admin.yaml -n admin1401

Reference: search for "create pod secret".

[Secrets](https://kubernetes.io/docs/concepts/configuration/secret/)

Mock Exam 1

Problem 1

Deploy a pod named nginx-pod using the nginx:alpine image.

In short: run a pod called nginx-pod from the nginx:alpine image.

Solution

    kubectl run nginx-pod --image=nginx:alpine

Or, to generate a manifest instead:

    kubectl run nginx-pod --image=nginx:alpine --dry-run=client -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: nginx-pod
      name: nginx-pod
    spec:
      containers:
      - image: nginx:alpine
        name: nginx-pod
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Problem 2

Deploy a messaging pod using the redis:alpine image with the labels set to tier=msg.

In short: run a pod called messaging from the redis:alpine image with the label tier=msg.

Solution

    controlplane ~ ➜ kubectl run messaging --image=redis:alpine --labels=tier=msg --dry-run=client -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        tier: msg
      name: messaging
    spec:
      containers:
      - image: redis:alpine
        name: messaging
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

    controlplane ~ ➜ kubectl run messaging --image=redis:alpine --labels=tier=msg
    pod/messaging created

Problem 3

Create a namespace named apx-x9984574.

In short: create the namespace apx-x9984574.

Solution

    kubectl create namespace apx-x9984574

Problem 4

Get the list of nodes in JSON format and store it in a file at /opt/outputs/nodes-z3444kd9.json.

In short: dump the node list as JSON into /opt/outputs/nodes-z3444kd9.json.

Solution

    kubectl get nodes -o json > /opt/outputs/nodes-z3444kd9.json

Problem 5 (caution)

Create a service messaging-service to expose the messaging application within the cluster on port 6379.

In short: create a service called messaging-service that exposes the messaging pod inside the cluster on port 6379.

Solution

    controlplane ~ ✖ kubectl expose pod messaging --port=6379 --name=messaging-service --type=ClusterIP
    service/messaging-service exposed

    controlplane ~ ➜ kubectl get service
    NAME                TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    kubernetes          ClusterIP   10.96.0.1       <none>        443/TCP    88m
    messaging-service   ClusterIP   10.97.164.241   <none>        6379/TCP   6s

Reference

[kubectl expose](https://kubernetes.io/docs/reference/kubectl/generated/kubectl_expose/)

Problem 6

Create a deployment named hr-web-app using the image kodekloud/webapp-color with 2 replicas.

In short: create the deployment hr-web-app from kodekloud/webapp-color with two replicas.

Solution

    controlplane ~ ✖ kubectl create deployment hr-web-app --image=kodekloud/webapp-color --replicas=2 --dry-run=client -o yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: hr-web-app
      name: hr-web-app
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: hr-web-app
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: hr-web-app
        spec:
          containers:
          - image: kodekloud/webapp-color
            name: webapp-color
            resources: {}
    status: {}

    controlplane ~ ➜ kubectl create deployment hr-web-app --image=kodekloud/webapp-color --replicas=2
    deployment.apps/hr-web-app created

Problem 7 (static pod: the file location matters)

Create a static pod named static-busybox on the controlplane node that uses the busybox image and the command sleep 1000.

In short: create a static pod called static-busybox on the controlplane node, using the busybox image and the command sleep 1000.

Solution

    kubectl run static-busybox --image=busybox --dry-run=client -o yaml --command -- sleep 1000 > /etc/kubernetes/manifests/static-web.yaml

The location matters: the kubelet only picks up static pods from its manifest directory, here /etc/kubernetes/manifests.

    # generated yaml
    controlplane ~ ➜ kubectl run static-busybox --image=busybox --dry-run=client -o yaml --command -- sleep 1000
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: static-busybox
      name: static-busybox
    spec:
      containers:
      - command:
        - sleep
        - "1000"
        image: busybox
        name: static-busybox
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Problem 8

Create a POD in the finance namespace named temp-bus with the image redis:alpine.

In short: run a pod called temp-bus from redis:alpine in the finance namespace.

Solution

    kubectl run temp-bus -n finance --image=redis:alpine

    # generated yaml
    controlplane ~ ➜ kubectl run temp-bus -n finance --image=redis:alpine --dry-run=client -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: temp-bus
      name: temp-bus
      namespace: finance
    spec:
      containers:
      - image: redis:alpine
        name: temp-bus
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Problem 9 (how to check logs)

A new application orange is deployed. There is something wrong with it. Identify and fix the issue.

In short: the newly deployed application orange is broken. Find the cause and fix it.

Solution

Look at the error first.

    controlplane ~ ➜ k logs orange
    Defaulted container "orange-container" out of: orange-container, init-myservice (init)
    Error from server (BadRequest): container "orange-container" in pod "orange" is waiting to start: PodInitializing

The main container never starts, so the problem is most likely in the initialization step.

Check the log of the init container.

    controlplane ~ ✖ k logs orange init-myservice
    sh: sleeeep: not found
    # init-myservice is the init container

Now fix it.

Dump the pod to a yaml file, edit it, then delete the existing pod and redeploy.

    kubectl get pod orange -o yaml > orange.yaml
    # in orange.yaml, change "sleeeep 2;" to "sleep 2;"
    kubectl replace --force -f orange.yaml

    # result
    controlplane ~ ✖ kubectl get pod
    NAME     READY   STATUS    RESTARTS   AGE
    orange   1/1     Running   0          34s

Problem 10

Expose the hr-web-app as service hr-web-app-service application on port 30082 on the nodes on the cluster.

The web application listens on port 8080.

In short: expose hr-web-app as a NodePort service called hr-web-app-service on port 30082 of the cluster nodes. The web application listens on port 8080.

Solution

    controlplane ~ ➜ kubectl expose deployment hr-web-app --name=hr-web-app-service --port=8080 --type=NodePort --dry-run=client -o yaml > hrservice.yaml

    controlplane ~ ➜ ls
    hrservice.yaml  orange.yaml  sample.yaml

    controlplane ~ ➜ vi hrservice.yaml

    controlplane ~ ➜ cat hrservice.yaml
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: null
      labels:
        app: hr-web-app
      name: hr-web-app-service
    spec:
      ports:
      - port: 8080
        protocol: TCP
        nodePort: 30082 # changed to this
      selector:
        app: hr-web-app
      type: NodePort
    status:
      loadBalancer: {}

    controlplane ~ ➜ kubectl apply -f hrservice.yaml
    service/hr-web-app-service created

    controlplane ~ ➜ kubectl get svc
    NAME                 TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
    hr-web-app-service   NodePort   10.109.115.236   <none>        8080:30082/TCP   5s

Problem 11 (caution)

Use JSON PATH query to retrieve the osImages of all the nodes and store it in a file /opt/outputs/nodes_os_x43kj56.txt.

The osImages are under the nodeInfo section under status of each node.

In short: use a JSONPath query to collect the osImage of every node into /opt/outputs/nodes_os_x43kj56.txt. The value sits under status.nodeInfo of each node.

Solution

Use items[*] so that every node is included. items[0] would return only the first node.

    controlplane ~ ➜ kubectl get nodes -o=jsonpath='{.items[*].status.nodeInfo.osImage}' > /opt/outputs/nodes_os_x43kj56.txt

    controlplane ~ ➜ cat /opt/outputs/nodes_os_x43kj56.txt
    Ubuntu 22.04.3 LTS

Reference

[JSONPath Support](https://kubernetes.io/docs/reference/kubectl/jsonpath/)

Problem 12

Create a Persistent Volume with the given specification

Volume name: pv-analytics

Storage: 100Mi

Access mode: ReadWriteMany

Host path: /pv/data-analytics

In short: create a Persistent Volume that matches the specification above.

Solution

    controlplane ~ ➜ cat pv.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-analytics
    spec:
      capacity:
        storage: 100Mi
      accessModes:
      - ReadWriteMany
      hostPath:
        path: "/pv/data-analytics"

Reference

[Configure a Pod to Use a PersistentVolume for Storage](https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/)

Mock Exam 2

Problem 1

Take a backup of the etcd cluster and save it to /opt/etcd-backup.db.

In short: take an etcd snapshot and store it at /opt/etcd-backup.db.

Solution

    # check the etcd manifest for the certificate paths
    cat /etc/kubernetes/manifests/etcd.yaml

    # save the backup
    ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
      --cacert=/etc/kubernetes/pki/etcd/ca.crt \
      --cert=/etc/kubernetes/pki/etcd/server.crt \
      --key=/etc/kubernetes/pki/etcd/server.key \
      snapshot save /opt/etcd-backup.db

Reference

[Operating etcd clusters for Kubernetes](https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/)
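The certificate paths passed to etcdctl above come from the flags in the etcd static pod manifest. As a rough sketch, they can be pulled out with a small helper instead of copied by hand. `etcd_flag` is a hypothetical name, and the sketch assumes the kubeadm-style manifest layout where each flag sits on its own `- --flag=value` line.

```shell
#!/usr/bin/env bash
# etcd_flag is a hypothetical helper. It prints the value of one
# "- --<flag>=<value>" entry from a static pod manifest such as
# /etc/kubernetes/manifests/etcd.yaml.
etcd_flag() {
  local manifest="$1" flag="$2"
  sed -n "s/^[[:space:]]*-[[:space:]]*--${flag}=//p" "$manifest" | head -n 1
}

# Intended use on the controlplane node (needs etcdctl and the real manifest,
# so it is left commented out):
# m=/etc/kubernetes/manifests/etcd.yaml
# ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
#   --cacert="$(etcd_flag "$m" trusted-ca-file)" \
#   --cert="$(etcd_flag "$m" cert-file)" \
#   --key="$(etcd_flag "$m" key-file)" \
#   snapshot save /opt/etcd-backup.db
```

Because the pattern requires the flag name to follow `--` directly, asking for `cert-file` does not pick up `peer-cert-file`.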

Problem 2

Create a Pod called redis-storage with image: redis:alpine with a Volume of type emptyDir that lasts for the life of the Pod.

Specs on the below.

Pod named ‘redis-storage’ created

Pod ‘redis-storage’ uses Volume type of emptyDir

Pod ‘redis-storage’ uses volumeMount with mountPath = /data/redis

In short: create the pod redis-storage from redis:alpine with an emptyDir volume, which lasts for the life of the pod, mounted at /data/redis.

Solution

    controlplane ~ ➜ cat redis.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: redis-storage
    spec:
      containers:
      - name: redis
        image: redis:alpine
        volumeMounts:
        - name: redis-storage
          mountPath: /data/redis
      volumes:
      - name: redis-storage
        emptyDir: {}

Reference: search for "pod with volume".

[Configure a Pod to Use a Volume for Storage](https://kubernetes.io/docs/tasks/configure-pod-container/configure-volume-storage/)

Problem 3

Create a new pod called super-user-pod with image busybox:1.28. Allow the pod to be able to set system_time.

The container should sleep for 4800 seconds.

In short: create the pod super-user-pod from busybox:1.28, allow it to set the system time, and have the container sleep for 4800 seconds.

Pod: super-user-pod

Container Image: busybox:1.28

Is SYS_TIME capability set for the container?

Solution

    # generate the yaml file
    kubectl run super-user-pod --image=busybox:1.28 --dry-run=client -o yaml --command -- sleep 4800 > sys.yaml

    # edit with vi and add the securityContext
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: super-user-pod
      name: super-user-pod
    spec:
      containers:
      - command:
        - sleep
        - "4800"
        image: busybox:1.28
        name: super-user-pod
        resources: {}
        securityContext:
          capabilities:
            add: ["SYS_TIME"]
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Reference: search for "pod allow system time".

[Configure a Security Context for a Pod or Container](https://kubernetes.io/docs/tasks/configure-pod-container/security-context/)

Problem 4

A pod definition file is created at /root/CKA/use-pv.yaml. Make use of this manifest file and mount the persistent volume called pv-1. Ensure the pod is running and the PV is bound.

mountPath: /data

persistentVolumeClaim Name: my-pvc

persistentVolume Claim configured correctly

pod using the correct mountPath

pod using the persistent volume claim?

In short: use the manifest /root/CKA/use-pv.yaml to create the pod and mount the persistent volume pv-1. Make sure the pod is running and the PV is bound.

Mount path: /data

PersistentVolumeClaim name: my-pvc

Solution

Current state

    controlplane ~ ➜ cat /root/CKA/use-pv.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: use-pv
      name: use-pv
    spec:
      containers:
      - image: nginx
        name: use-pv
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

    controlplane ~ ➜ kubectl get pv
    NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
    pv-1   10Mi       RWO            Retain           Available                          <unset>                          2m28s

    controlplane ~ ➜ kubectl get pvc
    No resources found in default namespace.

There is no PVC, so create my-pvc.

The PV is 10Mi, so the PVC requests 10Mi as well.

    controlplane ~ ➜ vi mypvc.yaml

    controlplane ~ ➜ cat mypvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 10Mi

Apply mypvc.yaml with kubectl apply -f so the claim exists and can bind to pv-1. Without it the pod stays Pending.

Edit /root/CKA/use-pv.yaml

    controlplane ~ ➜ vi /root/CKA/use-pv.yaml

    controlplane ~ ➜ cat /root/CKA/use-pv.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: use-pv
      name: use-pv
    spec:
      containers:
      - image: nginx
        name: use-pv
        volumeMounts:
        - mountPath: "/data"
          name: mypd
      volumes:
      - name: mypd
        persistentVolumeClaim:
          claimName: my-pvc

    controlplane ~ ➜ k apply -f /root/CKA/use-pv.yaml
    pod/use-pv created

Reference: search for "pod pv".

[Configure a Pod to Use a PersistentVolume for Storage](https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/)

Problem 5

Create a new deployment called nginx-deploy, with image nginx:1.16 and 1 replica. Next upgrade the deployment to version 1.17 using rolling update.

Deployment: nginx-deploy

Image: nginx:1.16

Task: Upgrade the version of the deployment to 1.17

Task: Record the changes for the image upgrade

In short: create the deployment nginx-deploy with image nginx:1.16 and one replica, upgrade it to 1.17 with a rolling update, and record the change for the image upgrade.

Solution

Create the deployment

    controlplane ~ ➜ kubectl create deployment nginx-deploy --image=nginx:1.16 --replicas=1
    deployment.apps/nginx-deploy created

Perform the rolling update

    controlplane ~ ➜ kubectl set image deployment/nginx-deploy nginx=nginx:1.17
    deployment.apps/nginx-deploy image updated

Problem 6

Create a new user called john. Grant him access to the cluster. John should have permission to create, list, get, update and delete pods in the development namespace . The private key exists in the location: /root/CKA/john.key and csr at /root/CKA/john.csr.

Important Note: As of kubernetes 1.19, the CertificateSigningRequest object expects a signerName.

Please refer the documentation to see an example. The documentation tab is available at the top right of terminal.

CSR: john-developer Status: Approved

Role Name: developer, namespace: development, Resource: Pods

Access: User ‘john’ has appropriate permissions

In short: create a new user john and give him access to the cluster. John must be able to create, list, get, update and delete pods in the development namespace. Since Kubernetes 1.19 the CertificateSigningRequest object requires a signerName; the documentation tab at the top right of the terminal has an example.

CSR: john-developer, status: Approved

Role name: developer, namespace: development, resource: pods

Access: user john has the appropriate permissions.

Solution

The private key is at /root/CKA/john.key and the CSR is at /root/CKA/john.csr.

    controlplane ~ ➜ cat /root/CKA/john.key
    -----BEGIN PRIVATE KEY-----
    MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQCvy+08gPV3eW2t
    .....
    i05kCsihj0DtNoQS9+fWGCdW1aI64YXVNknNSUeW/vImNkOTfgTfJK7J/y4Uwv20
    NYy1c/dSe9zDu6SIbT9P/85+
    -----END PRIVATE KEY-----

    controlplane ~ ➜ cat /root/CKA/john.csr
    -----BEGIN CERTIFICATE REQUEST-----
    MIICVDCCATwCAQAwDzENMAsGA1UEAwwEam9objCCASIwDQYJKoZIhvcNAQEBBQAD
    .....
    jBiGcu9894jH7YhkAMzxNYy9Tkv44sSO
    -----END CERTIFICATE REQUEST-----

- First create the CSR (CertificateSigningRequest) object.

Reference

[Certificates and Certificate Signing Requests](https://kubernetes.io/docs/reference/access-authn-authz/certificate-signing-requests/#create-certificatesigningrequest)

The template from the docs looks like this.

    cat <<EOF | kubectl apply -f -
    apiVersion: certificates.k8s.io/v1
    kind: CertificateSigningRequest
    metadata:
      name: myuser
    spec:
      request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1ZqQ0NBVDRDQVFBd0VURVBNQTBHQTFVRUF3d0dZVzVuWld4aE1JSUJJakFOQmdrcWhraUc5dzBCQVFFRgpBQU9DQVE4QU1JSUJDZ0tDQVFFQTByczhJTHRHdTYxakx2dHhWTTJSVlRWMDNHWlJTWWw0dWluVWo4RElaWjBOCnR2MUZtRVFSd3VoaUZsOFEzcWl0Qm0wMUFSMkNJVXBGd2ZzSjZ4MXF3ckJzVkhZbGlBNVhwRVpZM3ExcGswSDQKM3Z3aGJlK1o2MVNrVHF5SVBYUUwrTWM5T1Nsbm0xb0R2N0NtSkZNMUlMRVI3QTVGZnZKOEdFRjJ6dHBoaUlFMwpub1dtdHNZb3JuT2wzc2lHQ2ZGZzR4Zmd4eW8ybmlneFNVekl1bXNnVm9PM2ttT0x1RVF6cXpkakJ3TFJXbWlECklmMXBMWnoyalVnald4UkhCM1gyWnVVV1d1T09PZnpXM01LaE8ybHEvZi9DdS8wYk83c0x0MCt3U2ZMSU91TFcKcW90blZtRmxMMytqTy82WDNDKzBERHk5aUtwbXJjVDBnWGZLemE1dHJRSURBUUFCb0FBd0RRWUpLb1pJaHZjTgpBUUVMQlFBRGdnRUJBR05WdmVIOGR4ZzNvK21VeVRkbmFjVmQ1N24zSkExdnZEU1JWREkyQTZ1eXN3ZFp1L1BVCkkwZXpZWFV0RVNnSk1IRmQycVVNMjNuNVJsSXJ3R0xuUXFISUh5VStWWHhsdnZsRnpNOVpEWllSTmU3QlJvYXgKQVlEdUI5STZXT3FYbkFvczFqRmxNUG5NbFpqdU5kSGxpT1BjTU1oNndLaTZzZFhpVStHYTJ2RUVLY01jSVUyRgpvU2djUWdMYTk0aEpacGk3ZnNMdm1OQUxoT045UHdNMGM1dVJVejV4T0dGMUtCbWRSeEgvbUNOS2JKYjFRQm1HCkkwYitEUEdaTktXTU0xMzhIQXdoV0tkNjVoVHdYOWl4V3ZHMkh4TG1WQzg0L1BHT0tWQW9FNkpsYWFHdTlQVmkKdjlOSjVaZlZrcXdCd0hKbzZXdk9xVlA3SVFjZmg3d0drWm89Ci0tLS0tRU5EIENFUlRJRklDQVRFIFJFUVVFU1QtLS0tLQo=
      signerName: kubernetes.io/kube-apiserver-client
      expirationSeconds: 86400  # one day
      usages:
      - client auth
    EOF

Copy this as the starting point for the manifest file.

The important part is request: it must hold the base64-encoded content of the CSR file, on a single line.

    cat /root/CKA/john.csr | base64 | tr -d "\n"

Now use that value to create the manifest.
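Pasting the encoded value by hand is easy to get wrong, so here is one possible way to generate the whole manifest in a single step. This is a sketch under assumptions: `render_csr_manifest` is a hypothetical helper name, and the fields simply mirror the template above without expirationSeconds.

```shell
#!/usr/bin/env bash
# render_csr_manifest is a hypothetical helper. It prints a
# CertificateSigningRequest manifest whose request field is the base64 of the
# given CSR file, with the line breaks of the base64 output removed.
render_csr_manifest() {
  local name="$1" csr_file="$2" encoded
  encoded="$(base64 < "$csr_file" | tr -d '\n')"
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: ${name}
spec:
  request: ${encoded}
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth
EOF
}

# Intended use in the lab (needs kubectl and the real CSR file):
# render_csr_manifest john-developer /root/CKA/john.csr | kubectl apply -f -
```

The `tr -d '\n'` step matters because base64 wraps its output by default, and a wrapped value would break the single-line YAML field.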

    vi john-csr.yaml

    apiVersion: certificates.k8s.io/v1
    kind: CertificateSigningRequest
    metadata:
      name: john-developer
    spec:
      request: # put the encoded value here
      signerName: kubernetes.io/kube-apiserver-client
      usages:
      - client auth
    # the expirationSeconds line from the template is removed

    kubectl create -f john-csr.yaml

Check

    controlplane ~ ➜ k get csr
    NAME             AGE   SIGNERNAME                                    REQUESTOR                  REQUESTEDDURATION   CONDITION
    csr-5xmld        55m   kubernetes.io/kube-apiserver-client-kubelet   system:node:controlplane   <none>              Approved,Issued
    csr-v5j9p        55m   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:z54adw    <none>              Approved,Issued
    john-developer   12s   kubernetes.io/kube-apiserver-client           kubernetes-admin           <none>              Pending

The request is still Pending, so approve it.

- Approve the CertificateSigningRequest

    kubectl certificate approve john-developer

- Create the Role

    # look up the syntax for creating a Role
    kubectl create role --help

    # example from the help text:
    # Create a role named "foo" with SubResource specified
    kubectl create role foo --verb=get,list,watch --resource=pods,pods/status

    # the role for this task
    kubectl create role developer --verb=create,get,list,update,delete --resource=pods -n development

Check

    controlplane ~ ➜ kubectl get role -n development
    NAME        CREATED AT
    developer   2024-03-25T11:32:42Z

    controlplane ~ ✖ k describe role -n development
    Name:         developer
    Labels:       <none>
    Annotations:  <none>
    PolicyRule:
      Resources  Non-Resource URLs  Resource Names  Verbs
      ---------  -----------------  --------------  -----
      pods       []                 []              [create get list update delete]

- Role binding (ties the role to a user or other subject)

Before creating it, check with kubectl auth. The auth can-i command reports whether a given user is allowed to perform an action.

    kubectl auth can-i get pods --namespace=development --as john
    no

    kubectl auth can-i create pods --namespace=development --as john
    no

Now create the role binding.

    kubectl create rolebinding john-developer --role=developer --user=john -n development

    # check
    kubectl get rolebinding.rbac.authorization.k8s.io -n development
    kubectl describe rolebinding.rbac.authorization.k8s.io -n development

    kubectl auth can-i get pods --namespace=development --as john
    kubectl auth can-i create pods --namespace=development --as john
    kubectl auth can-i watch pods --namespace=development --as john

Problem 7

Create an nginx pod called nginx-resolver using image nginx, expose it internally with a service called nginx-resolver-service. Test that you are able to look up the service and pod names from within the cluster. Use the image: busybox:1.28 for dns lookup. Record results in /root/CKA/nginx.svc and /root/CKA/nginx.pod

Pod: nginx-resolver created

Service DNS Resolution recorded correctly

Pod DNS resolution recorded correctly

nginx 이미지를 사용하여 nginx-resolver라는 이름의 nginx pod를 생성하고 내부적으로 nginx-resolver-service라는 서비스를 노출합니다. 클러스터 내에서 서비스 및 pod 이름을 조회할 수 있는지 확인합니다. dns 조회에는 busybox:1.28 이미지를 사용합니다. 결과는 /root/CKA/nginx.svc 및 /root/CKA/nginx.pod에 기록합니다.

Pod: nginx-resolver가 생성되었습니다.

서비스 DNS 해결이 올바르게 기록되었습니다.

Pod DNS 해결이 올바르게 기록되었습니다.

풀이

- 먼저 pod 를 생성

kubectl run nginx-resolver --image=nginx- 이 pod를 expose 해서 nginx-resolver-service로 노출

kubectl expose pod nginx-resolver --name=nginx-resolver-service --port=80 --target-port=80 --type=ClusterIP# nginx 는 80번 포트다.- “busybox”라는 이름의 파드에서 “nslookup” 명령을 사용하여 “nginx-resolver-service” 서비스의 DNS를 조회

First, create the busybox pod.

    kubectl run busybox --image=busybox:1.28 -- sleep 4000

Verify:

    # kubectl exec runs a command inside a running container and shows its output.
    kubectl exec busybox -- nslookup nginx-resolver-service
    kubectl exec busybox -- nslookup nginx-resolver-service > /root/CKA/nginx.svc

- From the busybox pod, look up the DNS record of the nginx-resolver pod with nslookup.

List the pods to find the IP of nginx-resolver; the pod's DNS name is built from that IP.

    controlplane ~ ➜ k get pods -o wide
    NAME                            READY   STATUS    RESTARTS   AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    busybox                         1/1     Running   0          65s     10.244.192.5   node01   <none>           <none>
    nginx-deploy-555dd78576-zl2kp   1/1     Running   0          17m     10.244.192.4   node01   <none>           <none>
    nginx-resolver                  1/1     Running   0          2m25s   10.244.192.3   node01   <none>           <none>
    redis-storage                   1/1     Running   0          21m     10.244.192.1   node01   <none>           <none>
    super-user-pod                  1/1     Running   0          20m     10.244.192.2   node01   <none>           <none>
    use-pv                          0/1     Pending   0          18m     <none>         <none>   <none>           <none>

Verify:

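    controlplane ~ ✖ kubectl exec busybox -- nslookup 10-244-192-4.default.pod.cluster.local
    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      10-244-192-4.default.pod.cluster.local
    Address 1: 10.244.192.4

    controlplane ~ ➜ kubectl exec busybox -- nslookup 10-244-192-4.default.pod > /root/CKA/nginx.pod

Note: in the pod listing above, 10.244.192.4 belongs to nginx-deploy-555dd78576-zl2kp, while nginx-resolver has 10.244.192.3, so the name to query for this task is 10-244-192-3.default.pod.

Reference (DNS)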

https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/

[DNS for Services and Pods

Your workload can discover Services within your cluster using DNS; this page explains how that works.

kubernetes.io](https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/)
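The pod DNS name used in the lookup is the pod IP with dots replaced by dashes, followed by the namespace, pod, and the cluster domain. A small sketch of that conversion; the helper name pod_dns_name is hypothetical, and cluster.local is assumed as the cluster domain (it matches the nslookup output above).

```shell
# Build the DNS A-record name of a pod from its IP: dots become dashes,
# followed by <namespace>.pod.<cluster-domain>.
pod_dns_name() {
  ip="$1"; namespace="${2:-default}"; domain="${3:-cluster.local}"
  printf '%s.%s.pod.%s\n' "$(printf '%s' "$ip" | tr '.' '-')" "$namespace" "$domain"
}

pod_dns_name 10.244.192.3 default
# prints: 10-244-192-3.default.pod.cluster.local

# Usage against a live cluster (IP taken from `kubectl get pods -o wide`):
#   kubectl exec busybox -- nslookup "$(pod_dns_name 10.244.192.3)" > /root/CKA/nginx.pod
```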

Problem 8

Create a static pod on node01 called nginx-critical with image nginx. Create this pod on node01 and make sure that it is recreated/restarted automatically in case of a failure.

Use /etc/kubernetes/manifests as the Static Pod path for example.

static pod configured under /etc/kubernetes/manifests?

Pod nginx-critical-node01 is up and running

Solution

- First, log in to node01.

    controlplane ~ ➜ k get nodes -o wide
    NAME           STATUS   ROLES           AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION   CONTAINER-RUNTIME
    controlplane   Ready    control-plane   42m   v1.29.0   192.22.127.9    <none>        Ubuntu 22.04.3 LTS   5.4.0-1106-gcp   containerd://1.6.26
    node01         Ready    <none>          41m   v1.29.0   192.22.127.12   <none>        Ubuntu 22.04.3 LTS   5.4.0-1106-gcp   containerd://1.6.26

    controlplane ~ ➜ ssh 192.22.127.12

- kubectl does not work on node01, so open a new terminal on the control plane node and generate the pod YAML there.

    # Generate the YAML on the control plane
    kubectl run nginx-critical --image=nginx --restart=Always --dry-run=client -o yaml

    # Back on node01
    node01 ~ ➜ cat > /etc/kubernetes/manifests/nginx-critical.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: nginx-critical
      name: nginx-critical
    spec:
      containers:
      - image: nginx
        name: nginx-critical
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}^C

    node01 ~ ✖ cat /etc/kubernetes/manifests/nginx-critical.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: nginx-critical
      name: nginx-critical
    spec:
      containers:
      - image: nginx
        name: nginx-critical
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always

- The pod is created as soon as the file is written.

    controlplane ~ ➜ kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-critical-node01   1/1     Running   0          28s

Mock EXAM 3

Problem 1

Create a new service account with the name pvviewer. Grant this Service account access to list all PersistentVolumes in the cluster by creating an appropriate cluster role called pvviewer-role and ClusterRoleBinding called pvviewer-role-binding.

Next, create a pod called pvviewer with the image: redis and serviceAccount: pvviewer in the default namespace.

ServiceAccount: pvviewer

ClusterRole: pvviewer-role

ClusterRoleBinding: pvviewer-role-binding

Pod: pvviewer

Pod configured to use ServiceAccount pvviewer ?

Solution

- First, create the service account.

    controlplane ~ ➜ kubectl create serviceaccount pvviewer
    serviceaccount/pvviewer created

    controlplane ~ ➜ k get sa
    NAME       SECRETS   AGE
    default    0         42m
    pvviewer   0         114s

- Create the ClusterRole.

The requirement to keep in mind: the role must grant access to list all PersistentVolumes in the cluster.

    controlplane ~ ➜ k create clusterrole --help
    Create a cluster role.

    Examples:
      # Create a cluster role named "pod-reader" that allows user to perform "get", "watch" and "list" on pods
      kubectl create clusterrole pod-reader --verb=get,list,watch --resource=pods

What should go in --resource?

The answer is PersistentVolumes.

Check the exact resource name with api-resources.

    controlplane ~ ➜ k api-resources | grep persistent
    persistentvolumeclaims   pvc   v1   true    PersistentVolumeClaim
    persistentvolumes        pv    v1   false   PersistentVolume

Create the ClusterRole.

    controlplane ~ ➜ k create clusterrole pvviewer-role --verb=list --resource=persistentvolumes
    clusterrole.rbac.authorization.k8s.io/pvviewer-role created

    # Verify
    controlplane ~ ➜ k describe clusterrole pvviewer-role
    Name:         pvviewer-role
    Labels:       <none>
    Annotations:  <none>
    PolicyRule:
      Resources          Non-Resource URLs  Resource Names  Verbs
      ---------          -----------------  --------------  -----
      persistentvolumes  []                 []              [list]

- Create the ClusterRoleBinding.

    controlplane ~ ➜ k create clusterrolebinding --help
    Create a cluster role binding for a particular role or cluster role.

    Examples:
      # Create a role binding for serviceaccount monitoring:sa-dev using the admin role
      kubectl create rolebinding admin-binding --role=admin --serviceaccount=monitoring:sa-dev

    controlplane ~ ✖ k create clusterrolebinding pvviewer-role-binding --clusterrole=pvviewer-role --serviceaccount=default:pvviewer
    clusterrolebinding.rbac.authorization.k8s.io/pvviewer-role-binding created

Verify:

    controlplane ~ ➜ k get clusterrolebindings.rbac.authorization.k8s.io
    NAME                    ROLE                 AGE
    pvviewer-role-binding   Role/pvviewer-role   11s

    controlplane ~ ➜ k describe clusterrolebindings.rbac.authorization.k8s.io pvviewer-role-binding
    Name:         pvviewer-role-binding
    Labels:       <none>
    Annotations:  <none>
    Role:
      Kind:  ClusterRole
      Name:  pvviewer-role
    Subjects:
      Kind            Name      Namespace
      ----            ----      ---------
      ServiceAccount  pvviewer  default

- Create the pvviewer pod.

    controlplane ~ ➜ k run pvviewer --image=redis --dry-run=client -o yaml > test.yaml

    controlplane ~ ➜ vi test.yaml

    controlplane ~ ➜ cat test.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pvviewer
      name: pvviewer
    spec:
      serviceAccountName: pvviewer # added
      containers:
      - image: redis
        name: pvviewer
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Finally, apply the manifest with kubectl apply -f test.yaml to create the pod.

Problem 2 (search the docs for "source internalip")

List the InternalIP of all nodes of the cluster. Save the result to a file /root/CKA/node_ips.

Answer should be in the format: InternalIP of controlplane InternalIP of node (all on a single line)

Solution (search the docs for "get InternalIP")

    kubectl get nodes -o jsonpath='{.items[*].status.addresses[?(@.type=="InternalIP")].address}' > /root/CKA/node_ips

Reference

https://kubernetes.io/docs/tutorials/services/source-ip/

[Using Source IP

Applications running in a Kubernetes cluster find and communicate with each other, and the outside world, through the Service abstraction. This document explains what happens to the source IP of packets sent to different types of Services, and how you can

kubernetes.io](https://kubernetes.io/docs/tutorials/services/source-ip/)
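Because the grader expects every IP on a single line, it can help to sanity-check the saved file before moving on. The sketch below wraps the same jsonpath query and adds a check; the function names and the KUBECTL override variable are illustrative assumptions.

```shell
# Save the InternalIP of every node on a single line.
# KUBECTL can be overridden (an assumption, so the sketch can be dry-run).
save_node_ips() {
  out_file="$1"
  ${KUBECTL:-kubectl} get nodes \
    -o jsonpath='{.items[*].status.addresses[?(@.type=="InternalIP")].address}' \
    > "$out_file"
}

# Succeeds only if the file holds space-separated IPv4 addresses on one line.
check_node_ips() {
  file="$1"
  [ -s "$file" ] || return 1
  [ "$(wc -l < "$file")" -le 1 ] || return 1
  for ip in $(cat "$file"); do
    printf '%s\n' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$' || return 1
  done
}

# Usage against a live cluster:
#   save_node_ips /root/CKA/node_ips && check_node_ips /root/CKA/node_ips && echo OK
```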

Problem 3

Create a pod called multi-pod with two containers.

Container 1: name: alpha, image: nginx

Container 2: name: beta, image: busybox, command: sleep 4800

Environment Variables:

container 1:

name: alpha

container 2:

name: beta

Solution

    k run multi-pod --image=busybox --dry-run=client -o yaml --command -- sleep 4800 > multi.yaml

    # Edit
    controlplane ~ ➜ vi multi.yaml

    controlplane ~ ➜ cat multi.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: multi-pod
      name: multi-pod
    spec:
      containers:
      - command:
        - sleep
        - "4800"
        image: busybox
        name: beta
        env:
        - name: name
          value: beta
      - image: nginx
        name: alpha
        env:
        - name: name
          value: alpha

Problem 4 (search the docs for "security context")

Create a Pod called non-root-pod , image: redis:alpine

runAsUser: 1000

fsGroup: 2000

Solution

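    # --dry-run=client only generates the YAML; without it the pod would be created immediately.
    k run non-root-pod --image=redis:alpine --dry-run=client -o yaml > non-pod.yaml

Refer to the following snippet from the docs: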

    apiVersion: v1
    kind: Pod
    metadata:
      name: security-context-demo
    spec:
      securityContext:
        runAsUser: 1000
        runAsGroup: 3000
        fsGroup: 2000

Edit the YAML file:

    controlplane ~ ➜ cat non-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: non-root-pod
      name: non-root-pod
    spec:
      securityContext:
        runAsUser: 1000
        fsGroup: 2000
      containers:
      - image: redis:alpine
        name: non-root-pod
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create the pod:

    # Apply the file that was edited above (non-pod.yaml).
    kubectl apply -f non-pod.yaml

Reference

https://kubernetes.io/docs/tasks/configure-pod-container/security-context/

[Configure a Security Context for a Pod or Container

A security context defines privilege and access control settings for a Pod or Container. Security context settings include, but are not limited to: Discretionary Access Control: Permission to access an object, like a file, is based on user ID (UID) and gro

kubernetes.io](https://kubernetes.io/docs/tasks/configure-pod-container/security-context/)
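Once the pod is running, the security context can be confirmed from inside the container: the process UID should equal runAsUser, and the fsGroup should appear among the supplementary groups. A minimal sketch, assuming the image ships an id binary; the function name verify_security_context and the KUBECTL override variable are hypothetical.

```shell
# Check that the container runs as the expected UID and carries the fsGroup
# as a supplementary group. KUBECTL can be overridden (an assumption).
verify_security_context() {
  pod="$1"; want_uid="$2"; want_fsgroup="$3"
  uid=$(${KUBECTL:-kubectl} exec "$pod" -- id -u) || return 1
  gids=$(${KUBECTL:-kubectl} exec "$pod" -- id -G) || return 1
  [ "$uid" = "$want_uid" ] || return 1
  # Pad with spaces so that 2000 does not match 20000.
  case " $gids " in
    *" $want_fsgroup "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage against a live cluster:
#   verify_security_context non-root-pod 1000 2000 && echo "security context OK"
```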

Problem 5 (search the docs for "NetworkPolicy")

We have deployed a new pod called np-test-1 and a service called np-test-service. Incoming connections to this service are not working. Troubleshoot and fix it.

Create NetworkPolicy, by the name ingress-to-nptest that allows incoming connections to the service over port 80.

Important: Don’t delete any current objects deployed.

Solution

    controlplane ~ ➜ k get svc
    NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    kubernetes        ClusterIP   10.96.0.1       <none>        443/TCP   43m
    np-test-service   ClusterIP   10.103.224.59   <none>        80/TCP    3m56s

    controlplane ~ ➜ k describe svc np-test-service
    Name:              np-test-service
    Namespace:         default
    Labels:            run=np-test-1
    Annotations:       <none>
    Selector:          run=np-test-1
    Type:              ClusterIP
    IP Family Policy:  SingleStack
    IP Families:       IPv4
    IP:                10.103.224.59
    IPs:               10.103.224.59
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.192.4:80
    Session Affinity:  None
    Events:            <none>

    controlplane ~ ➜ k get networkpolicies.networking.k8s.io
    NAME           POD-SELECTOR   AGE
    default-deny   <none>         4m14s

    controlplane ~ ➜ k describe networkpolicies.networking.k8s.io default-deny
    Name:         default-deny
    Namespace:    default
    Created on:   2024-03-27 11:00:42 +0000 UTC
    Labels:       <none>
    Annotations:  <none>
    Spec:
      PodSelector:     <none> (Allowing the specific traffic to all pods in this namespace)
      Allowing ingress traffic:
        <none> (Selected pods are isolated for ingress connectivity)
      Not affecting egress traffic
      Policy Types: Ingress

The PodSelector is empty, so the policy selects every pod in the namespace, and because it lists no ingress rules, all ingress traffic to those pods is denied.

Create a new NetworkPolicy that allows ingress on port 80.

First, inspect the pod to find the label the policy must select.

    controlplane ~ ➜ kubectl get pod np-test-1
    NAME        READY   STATUS    RESTARTS   AGE
    np-test-1   1/1     Running   0          8m32s

    controlplane ~ ➜ k describe pod np-test-1
    Name:             np-test-1
    Namespace:        default
    Priority:         0
    Service Account:  default
    Node:             node01/192.21.213.6
    Start Time:       Wed, 27 Mar 2024 11:00:41 +0000
    Labels:           run=np-test-1
    ....

Use the existing NetworkPolicy as a template for the new one.

    controlplane ~ ➜ k get networkpolicies.networking.k8s.io default-deny -o yaml > netpol.yaml

    controlplane ~ ➜ vi netpol.yaml

    controlplane ~ ➜ cat netpol.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: ingress-to-nptest
      namespace: default
    spec:
      podSelector:
        matchLabels:
          run: np-test-1
      policyTypes:
      - Ingress
      ingress:
      - ports:
        - protocol: TCP
          port: 80

    controlplane ~ ➜ k apply -f netpol.yaml

To test the new NetworkPolicy, start a temporary pod and try the connection from inside it.

    controlplane ~ ✖ k run test-np --image=busybox:1.28 --rm -it -- /bin/sh
    If you don't see a command prompt, try pressing enter.
    / # nc -z -v -w 2 np-test-service 80
    np-test-service (10.103.224.59:80) open
    / #

nc is netcat: -z closes the connection as soon as it is established, -v is verbose, and -w 2 sets the timeout to 2 seconds.

Reference

https://kubernetes.io/docs/concepts/services-networking/network-policies/

[Network Policies

If you want to control traffic flow at the IP address or port level (OSI layer 3 or 4), NetworkPolicies allow you to specify rules for traffic flow within your cluster, and also between Pods and the outside world. Your cluster must use a network plugin tha

kubernetes.io](https://kubernetes.io/docs/concepts/services-networking/network-policies/)
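The interactive nc test can also be run as a one-shot probe, which is handy for re-checking after each policy change. This is a sketch under assumptions: the function name probe_service and the KUBECTL override are hypothetical, and it relies on kubectl run passing back the container's exit status when the pod is attached with --restart=Never.

```shell
# Start a throwaway busybox pod, try a TCP connect to <service>:<port>,
# and delete the pod afterwards (--rm). KUBECTL can be overridden (an assumption).
probe_service() {
  svc="$1"; port="$2"
  # $$ (the shell's PID) keeps the probe pod name unique per shell.
  ${KUBECTL:-kubectl} run "np-probe-$$" --image=busybox:1.28 \
    --rm -i --restart=Never -- nc -z -w 2 "$svc" "$port"
}

# Usage against a live cluster:
#   probe_service np-test-service 80 && echo "port 80 reachable" || echo "port 80 blocked"
```

The probe pod only needs egress, which the default-deny policy shown earlier does not restrict.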

Problem 6

Taint the worker node node01 to be Unschedulable. Once done, create a pod called dev-redis, image redis:alpine, to ensure workloads are not scheduled to this worker node. Finally, create a new pod called prod-redis and image: redis:alpine with toleration to be scheduled on node01.

key: env_type, value: production, operator: Equal and effect: NoSchedule

Solution

- Taint the node.

    controlplane ~ ➜ k taint --help
    Examples:
      # Update node 'foo' with a taint with key 'dedicated' and value 'special-user' and effect 'NoSchedule'
      # If a taint with that key and effect already exists, its value is replaced as specified
      kubectl taint nodes foo dedicated=special-user:NoSchedule

    controlplane ~ ➜ k taint nodes node01 env_type=production:NoSchedule
    node/node01 tainted

    # Verify the taint
    controlplane ~ ➜ k describe node node01 | grep -i taint
    Taints:             env_type=production:NoSchedule

- Create the pods.

First, dev-redis.

node01 now carries the taint env_type=production:NoSchedule, so a pod without a matching toleration cannot be scheduled on it.

    controlplane ~ ➜ k run dev-redis --image=redis:alpine
    pod/dev-redis created

Next, create prod-redis.

    controlplane ~ ➜ kubectl run prod-redis --image=redis:alpine --dry-run=client -o yaml > prod.yaml

    controlplane ~ ➜ vi prod.yaml

    # Toleration syntax from the docs, used as a template for prod-redis
    tolerations:
    - key: "key1"
      operator: "Equal"
      value: "value1"
      effect: "NoSchedule"

    controlplane ~ ➜ cat prod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: prod-redis
      name: prod-redis
    spec:
      containers:
      - image: redis:alpine
        name: prod-redis
        resources: {}
      tolerations:
      - effect: NoSchedule
        key: env_type
        operator: Equal
        value: production
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

    controlplane ~ ➜ k apply -f prod.yaml
    pod/prod-redis created

    controlplane ~ ➜ k get pod -o wide
    NAME         READY   STATUS    RESTARTS   AGE   IP             NODE     NOMINATED NODE   READINESS GATES
    prod-redis   1/1     Running   0          6s    10.244.192.5   node01   <none>           <none>

Reference

https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/

[Taints and Tolerations

Node affinity is a property of Pods that attracts them to a set of nodes (either as a preference or a hard requirement). Taints are the opposite — they allow a node to repel a set of pods. Tolerations are applied to pods. Tolerations allow the scheduler t

kubernetes.io](https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/)
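To confirm the effect of the taint, compare where each pod was placed: dev-redis must not be on node01, while prod-redis is allowed there. A toleration permits scheduling on the tainted node but does not force it. The helper names and the KUBECTL override variable below are illustrative assumptions.

```shell
# Print the node a pod was scheduled to (empty while the pod is unscheduled).
# KUBECTL can be overridden (an assumption, so the sketch can be dry-run).
pod_node() {
  ${KUBECTL:-kubectl} get pod "$1" -o jsonpath='{.spec.nodeName}'
}

# Succeeds when the pod is NOT on the given node (an unscheduled pod also passes).
not_on_node() {
  [ "$(pod_node "$1")" != "$2" ]
}

# Usage against a live cluster:
#   not_on_node dev-redis node01 && echo "dev-redis kept off node01"
#   echo "prod-redis runs on: $(pod_node prod-redis)"
```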

Problem 7 (search the docs for "pod labels")

Create a pod called hr-pod in hr namespace belonging to the production environment and frontend tier.

image: redis:alpine

Use appropriate labels and create all the required objects if it does not exist in the system already.

Solution

    # Create the namespace
    k create namespace hr

    # Verify
    k get ns

Create the pod with the labels applied.

    kubectl run hr-pod --image=redis:alpine --namespace=hr --labels=environment=production,tier=frontend

    # Verify
    k get pod -n hr
    k describe pod hr-pod -n hr

Reference

https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/

[Labels and Selectors

Labels are key/value pairs that are attached to objects such as Pods. Labels are intended to be used to specify identifying attributes of objects that are meaningful and relevant to users, but do not directly imply semantics to the core system. Labels can

kubernetes.io](https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/)
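A label selector gives a quicker check than reading the describe output: if the labels were applied correctly, selecting on both of them returns the pod. A small sketch; the function name pods_with_labels and the KUBECTL override variable are hypothetical.

```shell
# List pods in a namespace that match a label selector.
# KUBECTL can be overridden (an assumption, so the sketch can be dry-run).
pods_with_labels() {
  namespace="$1"; selector="$2"
  ${KUBECTL:-kubectl} get pods -n "$namespace" -l "$selector" -o name
}

# Usage against a live cluster; hr-pod should be listed:
#   pods_with_labels hr environment=production,tier=frontend
```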

Problem 8 (searching the docs for "kubeconfig port" shows examples that use port 6443)

A kubeconfig file called super.kubeconfig has been created under /root/CKA. There is something wrong with the configuration. Troubleshoot and fix it.

Solution

    kubectl cluster-info --kubeconfig=/root/CKA/super.kubeconfig
    # The server port in the kubeconfig is set to 9999; change it to 6443.

Problem 9

We have created a new deployment called nginx-deploy. scale the deployment to 3 replicas. Has the replica’s increased? Troubleshoot the issue and fix it.

Solution

    controlplane ~ ➜ kubectl get pod
    NAME                            READY   STATUS    RESTARTS   AGE
    multi-pod                       1/1     Running   0          12m
    nginx-deploy-56d8fc648f-trrhn   1/1     Running   0          8m43s
    np-test-1                       1/1     Running   0          12m

    controlplane ~ ➜ kubectl get deployments.apps
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deploy   1/1     1            1           8m46s

    controlplane ~ ➜ k scale deployment nginx-deploy --replicas=3
    deployment.apps/nginx-deploy scaled

    controlplane ~ ➜ k get deployments.apps
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deploy   1/3     1            1           9m7s

However, the number of running replicas does not increase.

    controlplane ~ ➜ k get pod -n kube-system
    NAME                                   READY   STATUS             RESTARTS   AGE
    ...
    kube-contro1ler-manager-controlplane   0/1     ImagePullBackOff   0          44s

    # The kube-controller-manager pod is stuck in ImagePullBackOff.
    $ cd /etc/kubernetes/manifests
    $ vi kube-controller-manager.yaml
    # Looking closely, "controller" is misspelled as "contro1ler": a letter l has been replaced by the digit 1.
    # Fixing all five occurrences of "contro1ler" appears to resolve it.
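The typo fix can also be applied with sed rather than editing each occurrence by hand. The sketch below is one possible approach, not the lab's prescribed method: the function name fix_controller_typo is hypothetical, and the backup is written outside the manifests directory on the assumption that the kubelet may try to load other files it finds there.

```shell
# Replace every "contro1ler" (digit 1) with "controller" in a manifest.
# The original file is first copied to a backup path outside the manifests directory.
fix_controller_typo() {
  manifest="$1"
  backup="${2:-/tmp/$(basename "$manifest").bak}"
  cp "$manifest" "$backup" || return 1
  # Read from the backup and rewrite the manifest in place.
  sed 's/contro1ler/controller/g' "$backup" > "$manifest"
}

# Usage on the control plane node:
#   grep -c 'contro1ler' /etc/kubernetes/manifests/kube-controller-manager.yaml
#   fix_controller_typo /etc/kubernetes/manifests/kube-controller-manager.yaml
```

After the manifest is corrected, the kubelet recreates the static pod, and the deployment should then scale to 3 replicas.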